Key Information & Summary

- Calibration is the act of ensuring that a scientific process or instrument will produce accurate results every time

- An instrument needs to be properly calibrated before it is used to make sure you obtain accurate results

- There are two main methods of calibration: the working curve method and the standard addition method

- An instrument needs to be calibrated after certain events, such as a knock, power-cut, or when instructed by the manufacturer

What is calibration?

In chemistry, calibration is defined as the act of making sure that a scientific process or instrument will produce results which are accurate. More formally, calibration determines the functional relationship between the values an instrument measures (its response) and the analytical quantity of interest, such as the concentration of an analyte.

Any instrument used in research needs to be properly calibrated to make sure the data it produces is valid and can be used by others. Over time, instruments can 'drift' due to normal wear and tear and can, therefore, give inaccurate results – this is why it’s important that machines are properly calibrated before use.

Why do you calibrate instruments?

Any instrument used in scientific research needs to be properly calibrated before it is used. Calibration checks, and where necessary adjusts, the instrument so that its readings are both precise and accurate. You, therefore, need to know what precision and accuracy mean:

- Precision is how close measurements are to each other

- Accuracy is how close measurements are to the true value

By correcting the instrument's response against known standards, calibration can reduce (or, in the best case, eliminate) bias in readings.
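The difference between the two terms can be made concrete with a few numbers. The sketch below is only an illustration: the readings, the 10.00 g reference mass, and the function name `precision_and_bias` are all hypothetical. It uses the standard deviation of repeated readings as a measure of precision and the offset of their mean from the true value (the bias) as a measure of accuracy.

```python
import statistics

def precision_and_bias(readings, true_value):
    """Return (standard deviation, bias) for repeated readings of a known reference."""
    spread = statistics.stdev(readings)             # precision: scatter between readings
    bias = statistics.mean(readings) - true_value   # accuracy: offset from the true value
    return spread, bias

# Hypothetical repeated readings of a 10.00 g reference mass on a balance
readings = [10.21, 10.19, 10.20, 10.22, 10.18]
spread, bias = precision_and_bias(readings, 10.00)
print(f"spread = {spread:.3f} g, bias = {bias:+.3f} g")
```

In this made-up case the readings agree closely with each other (good precision) but all sit about 0.2 g above the true value (poor accuracy), which is the kind of bias calibration is meant to correct.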

When do you calibrate instruments?

There are a number of scenarios in which an instrument used for research needs to be calibrated. Just a few are listed below:

- After an 'event' – this could be if the instrument is knocked, bumped, or moved. Any of these things can impact the accuracy of an instrument’s measurements.

- When measurements don’t seem right – if you are conducting research, but the measurements being produced don’t seem right, then the instrument may need to be calibrated again.

- When instructed by the manufacturer – some manufacturers require an instrument to be checked every so often to make sure it is working properly. If so, they will tell you how often this needs to be done.

How do you calibrate an instrument?

There are two main ways of calibrating an instrument – these are the working curve method and the standard addition method.

Working Curve Calibration

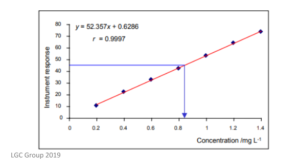

In the working curve method, a set of standards must be prepared, each containing a known amount of the analyte being measured. These standards are then measured using the instrument in question, and a calibration curve is plotted. This curve shows the relationship between the response of the instrument and the concentration of the analyte. An example of a calibration curve is shown above.
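A working curve can be sketched in a few lines of code. The example below assumes the response is linear in concentration and fits a straight line by least squares with `numpy.polyfit`. The standards, signals, and function names are hypothetical and chosen only for illustration.

```python
import numpy as np

def fit_working_curve(concentrations, responses):
    """Least-squares straight line: response = slope * concentration + intercept."""
    slope, intercept = np.polyfit(concentrations, responses, 1)
    return slope, intercept

def concentration_from_response(response, slope, intercept):
    """Invert the fitted line to estimate the concentration of an unknown sample."""
    return (response - intercept) / slope

# Hypothetical standards (mg/L) and instrument responses (arbitrary units)
standards = np.array([0.0, 2.0, 4.0, 6.0, 8.0, 10.0])
signals = np.array([0.02, 0.41, 0.80, 1.22, 1.60, 2.01])

slope, intercept = fit_working_curve(standards, signals)
unknown = concentration_from_response(1.00, slope, intercept)
print(f"slope = {slope:.4f}, intercept = {intercept:.4f}, unknown = {unknown:.2f} mg/L")
```

Here a sample giving a response of 1.00 is read off the fitted line at a little under 5 mg/L. If the real response is curved, a straight-line fit like this would not be appropriate.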

When using the working curve method, it is important that each standard is prepared individually and not all from the same stock solution. Any errors in the stock solution will carry through the entire calibration process, and thus the instrument will not be calibrated correctly.

The calibration curve should also be checked for outliers, which are measurements that differ significantly from the others. Put simply, an outlier pulls the regression line (line of best fit) away from the true relationship and leads to inaccurate results. It should, therefore, be investigated and removed if it turns out to be erroneous.
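One simple way to screen for such points is to leave each standard out in turn, fit the line to the rest, and see how far the omitted point lies from that line. The sketch below does this. It is a rough screening aid rather than a formal statistical test: the cut-off of 3 is an assumed rule of thumb, and the data and function name are hypothetical.

```python
import numpy as np

def leave_one_out_outliers(x, y, threshold=3.0):
    """Flag points lying far from the line fitted to all the other points.

    `threshold` is an assumed rule-of-thumb cut-off, not a formal test.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    flagged = []
    for i in range(len(x)):
        keep = np.arange(len(x)) != i            # every point except point i
        slope, intercept = np.polyfit(x[keep], y[keep], 1)
        residuals = y[keep] - (slope * x[keep] + intercept)
        # Residual standard deviation of the remaining points (n - 2 degrees of freedom)
        scatter = np.sqrt(np.sum(residuals**2) / (keep.sum() - 2))
        deviation = abs(y[i] - (slope * x[i] + intercept))
        if deviation > threshold * scatter:
            flagged.append(i)
    return flagged

# Hypothetical standards; the response at 6 mg/L has been made deliberately too high
standards = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
signals = [0.02, 0.41, 0.80, 1.60, 1.60, 2.01]
flagged = leave_one_out_outliers(standards, signals)
print("suspect standards (by index):", flagged)
```

A flagged standard is a candidate for re-measurement or closer inspection. Whether it is actually discarded is a judgement that should be based on evidence.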

There are a number of guidelines which should be followed when performing a working curve calibration. These are outlined below:

- The calibration standards should cover the range of interest – this is so that, during your actual experiment, your samples fall within the calibrated range and are read from the curve by interpolation rather than extrapolation

- A ‘blank’ should be included in your calibration – this is a standard which contains no analyte

- Don’t automatically set your regression line intercept to zero! Only do this if you have enough evidence to show that the intercept point is not actually statistically different from zero.
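The last guideline can be checked numerically. The sketch below is one possible way to do it, assuming a straight-line fit with roughly constant scatter: it computes the intercept, its standard error, and the resulting t statistic, then compares this with a tabulated critical value. The data and function name are hypothetical. The value 2.776 is the two-sided 95 % Student t value for 4 degrees of freedom (six standards minus two fitted parameters).

```python
import numpy as np

def intercept_t_statistic(x, y):
    """Return (intercept, standard error, t statistic) for a straight-line fit."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    # Residual standard deviation with n - 2 degrees of freedom
    s = np.sqrt(np.sum(residuals**2) / (n - 2))
    sxx = np.sum((x - x.mean())**2)
    std_err = s * np.sqrt(np.sum(x**2) / (n * sxx))
    return intercept, std_err, intercept / std_err

# Hypothetical standards (mg/L), including a blank, and their responses
standards = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
signals = [0.02, 0.41, 0.80, 1.22, 1.60, 2.01]

intercept, std_err, t_stat = intercept_t_statistic(standards, signals)
T_CRITICAL = 2.776  # tabulated two-sided 95 % t value for 4 degrees of freedom
intercept_differs_from_zero = abs(t_stat) > T_CRITICAL
print(f"t = {t_stat:.2f}, differs from zero: {intercept_differs_from_zero}")
```

For this made-up data the t statistic is below the critical value, so the intercept is not statistically different from zero at the 95 % level. Only in a case like this would forcing the line through zero be defensible.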

Standard-Addition Calibration

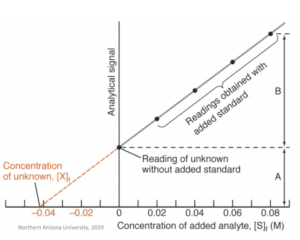

The standard-addition method of calibration helps to remove bias that may arise from a number of factors, including the temperature and composition of the actual matrix.

For the standard-addition method of calibration, two requirements must be met:

- The calibration curve has to be linear

- The instrument response must pass through zero, i.e. a solution containing no analyte must give no signal (after any blank correction)

In this method, the signal intensity of the sample solution is measured first. Known amounts of the analyte are then added to this solution, and the signal intensity is measured after each addition. Plotting signal intensity against added concentration gives a linear calibration curve. The concentration of the analyte in the original sample is found by extrapolating the regression line back to zero signal: the distance from the origin to the point where the line crosses the concentration axis equals the sample concentration.
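The extrapolation step can be sketched as follows. The example assumes the two requirements above hold and that the additions do not noticeably dilute the sample, so no volume correction is applied. The data and function name are hypothetical.

```python
import numpy as np

def standard_addition_concentration(added, signals):
    """Estimate the sample concentration from a standard-addition series.

    The fitted line signal = slope * added + intercept reaches zero signal at
    added = -intercept / slope, so the sample concentration is intercept / slope.
    Assumes a linear response and negligible dilution from the additions.
    """
    slope, intercept = np.polyfit(added, signals, 1)
    return intercept / slope

# Hypothetical series: added analyte (mg/L) and measured signal (arbitrary units)
added = [0.0, 1.0, 2.0, 3.0, 4.0]
signals = [0.30, 0.45, 0.60, 0.75, 0.90]

sample_conc = standard_addition_concentration(added, signals)
print(f"estimated sample concentration = {sample_conc:.2f} mg/L")
```

For this idealised data the fitted line has a slope of 0.15 and an intercept of 0.30, so it reaches zero signal at an added concentration of -2 mg/L, giving a sample concentration of about 2 mg/L.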

Because the analyte is added to the sample itself, the matrix remains essentially unchanged. It is for this reason that this method is useful in cases where the matrix is either very complicated or hard to reproduce.
